Apache Kafka was developed at LinkedIn, the popular professional networking and job-search platform, to meet growing business demands. It is a high-throughput, low-latency system that handles large volumes of real-time event data, and it is written in Java and Scala. LinkedIn later made Kafka open source and donated it to the Apache Software Foundation; today it is a distributed event store and stream-processing platform.


The Origin of Apache Kafka

LinkedIn needed a way to process massive amounts of event data from sources such as profile changes, status updates, and user clicks in real time.

So LinkedIn’s engineers built Kafka. With it, LinkedIn handles billions of events every day, enabling rapid data flow and real-time insights. This let LinkedIn improve the user experience by providing more personalized content and suggestions. Kafka’s robust architecture also provided the reliability and scalability that LinkedIn’s growing user base required.

Unlike conventional message queues, which remove messages once they are consumed, Kafka retains messages after consumption for a configurable retention period, so multiple consumers can read the same data independently. As a result, Kafka is well suited to messaging, event sourcing, stream processing, and building real-time data pipelines.
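The difference can be illustrated with a toy, in-memory model in Python. This is not Kafka’s client API: `RetainedLog` and `Reader` are hypothetical names, and each reader’s offset stands in for the position an independent consumer group would track. A conventional queue (`collections.deque`) is shown alongside for contrast.

```python
from collections import deque


class RetainedLog:
    """Toy append-only log: reading never deletes records (hypothetical, not Kafka's API)."""

    def __init__(self):
        self.records = []

    def append(self, value):
        self.records.append(value)
        return len(self.records) - 1  # offset of the new record

    def read(self, offset, max_records=10):
        # Reading has no side effects; each caller tracks its own position.
        return self.records[offset:offset + max_records]


class Reader:
    """Each reader keeps its own offset, like an independent consumer group."""

    def __init__(self, log):
        self.log = log
        self.offset = 0

    def poll(self):
        batch = self.log.read(self.offset)
        self.offset += len(batch)
        return batch


log = RetainedLog()
for event in ["login", "click", "logout"]:
    log.append(event)

analytics, audit = Reader(log), Reader(log)
print(analytics.poll())  # ['login', 'click', 'logout']
print(audit.poll())      # ['login', 'click', 'logout'], same data, read independently

# A conventional queue, by contrast, hands each message out once and removes it.
queue = deque(["login", "click", "logout"])
first = [queue.popleft() for _ in range(len(queue))]
print(first)        # ['login', 'click', 'logout']
print(list(queue))  # [], nothing left for a second consumer
```

Because reading never mutates the log, a third reader added later would still see all three events; that property is what makes replay and multiple downstream consumers possible.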

How Does Apache Kafka Work?

As a distributed platform, Kafka operates as a fault-tolerant cluster that spans multiple servers and even multiple data centers.

Kafka has three primary capabilities:

- It enables applications to publish or subscribe to data or event streams.

- It stores streams of records durably and in a fault-tolerant way, in the order in which they occurred.

- It processes streams of records in real time as they occur.

Records are published to topics and stored in append-only logs, in the order in which producers (client applications) write them. Each topic is divided into partitions, which are distributed across a cluster of Kafka brokers (servers).

Kafka stores the records in each partition durably on disk for a configurable retention period. Ordering is guaranteed within a partition but not across partitions. Each record in a partition has a sequential offset, and depending on the application’s needs, consumers can read new records in real time or start from a specific offset.
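A minimal sketch of keyed partitioning, assuming a toy topic with three partitions. Kafka’s default partitioner hashes the record key (with murmur2) to choose a partition; here `zlib.crc32` stands in for that hash, and `ToyTopic` is a hypothetical class, not part of any Kafka library. What it shows is that all records with the same key land in one partition, so their relative order is preserved, and that a consumer can replay a partition from any offset.

```python
import zlib
from collections import defaultdict


class ToyTopic:
    """Toy topic split into partitions (illustrative only, not Kafka's implementation)."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key):
        # Assumption: CRC32 stands in for Kafka's murmur2-based default partitioner.
        # The property that matters is the same: one key always maps to one partition.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def produce(self, key, value):
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)

    def consume(self, partition, from_offset=0):
        # Records come back in append order, starting at the requested offset.
        return self.partitions[partition][from_offset:]


topic = ToyTopic(num_partitions=3)
positions = defaultdict(list)
for i in range(3):
    for user in ["alice", "bob"]:
        p, offset = topic.produce(user, f"{user}-event-{i}")
        positions[user].append((p, offset))

# Every event for "alice" lands in the same partition, so their relative order is kept.
alice_partition = positions["alice"][0][0]
alice_events = [v for k, v in topic.consume(alice_partition) if k == "alice"]
print(alice_events)  # ['alice-event-0', 'alice-event-1', 'alice-event-2']

# Re-reading from a specific offset replays the partition from that point on.
print(topic.consume(alice_partition, from_offset=1))
```

Records with different keys (here, "alice" and "bob") may or may not share a partition, which is why Kafka only promises ordering within a partition.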

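Kafka ensures reliability by replicating partitions. Each partition has a leader replica on one broker and one or more follower replicas on other brokers; clients normally read from and write to the leader, and the followers copy its log. If the leader’s broker fails, an in-sync follower can take over, so the cluster tolerates broker failures without losing committed data.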
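The replication idea can be sketched with a heavily simplified model of one partition, assuming every follower counts as in sync. Real Kafka tracks an in-sync replica (ISR) set, uses a controller to elect leaders, and truncates divergent follower logs; this toy ignores all of that, and the class name and broker IDs are hypothetical. A record is treated as committed once every replica has copied it (the high watermark), and committed records survive a leader failure.

```python
class ToyPartitionReplicas:
    """Toy leader/follower replication for a single partition (heavily simplified)."""

    def __init__(self, follower_ids):
        self.leader_log = []
        self.follower_logs = {f: [] for f in follower_ids}

    def append(self, record):
        # Producers write to the leader only.
        self.leader_log.append(record)

    def follower_fetch(self, follower_id):
        # A follower copies whatever it is missing from the leader's log.
        replica_log = self.follower_logs[follower_id]
        replica_log.extend(self.leader_log[len(replica_log):])

    def high_watermark(self):
        # Simplification: every follower is treated as in sync, so a record is
        # committed once all replicas hold it, i.e. the shortest log length.
        lengths = [len(r) for r in self.follower_logs.values()]
        return min([len(self.leader_log)] + lengths)

    def committed(self):
        return self.leader_log[: self.high_watermark()]

    def fail_leader_and_elect(self, follower_id):
        # The chosen follower becomes leader with whatever it has copied. Records it
        # never received are lost, but by construction none of them were committed.
        self.leader_log = self.follower_logs.pop(follower_id)


replicas = ToyPartitionReplicas(follower_ids=["broker-2", "broker-3"])
replicas.append("a")
replicas.append("b")
replicas.follower_fetch("broker-2")
print(replicas.committed())  # [] because broker-3 has not copied anything yet
replicas.follower_fetch("broker-3")
print(replicas.committed())  # ['a', 'b']

replicas.append("c")  # written to the leader only
replicas.follower_fetch("broker-2")
committed_before_failure = replicas.committed()  # ['a', 'b']; broker-3 lacks 'c'

# The leader's broker fails and broker-3 takes over.
replicas.fail_leader_and_elect("broker-3")
print(replicas.leader_log)  # ['a', 'b']: every committed record survived
```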

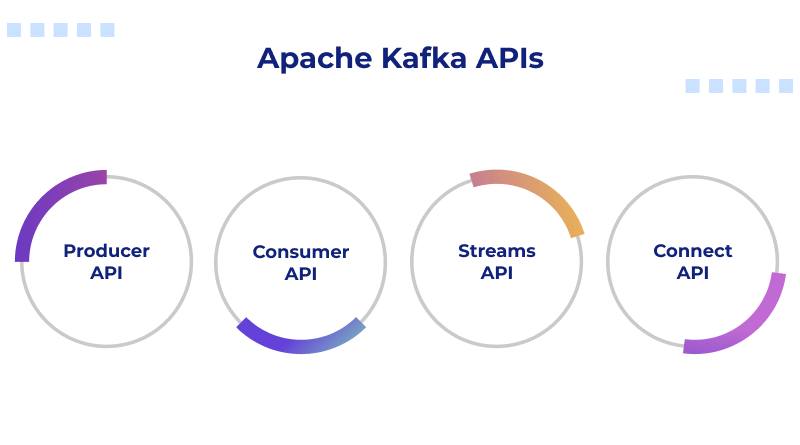

Apache Kafka APIs

Apache Kafka provides a set of core APIs for interacting with the distributed platform.

- Producer API: The API allows applications to publish a stream of records to one or more Kafka topics. Producers are responsible for sending data to Kafka brokers.

- Consumer API: The API allows applications to subscribe to one or more topics and process the stream of records produced to them.

- Streams API: The API is used to build stream-processing applications and microservices. It supports continuous computation on data streams, including transformations, aggregations, joins, and windowing operations.

- Connect API: The API allows developers to build connectors, which are reusable producers or consumers that simplify and automate integrating external data sources and sinks with a Kafka cluster.
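As an illustration of the windowing operations mentioned for the Streams API, the sketch below counts events per key in fixed, non-overlapping (tumbling) windows. It is plain Python, not the Kafka Streams API (which is a Java library), and it assumes events arrive as `(timestamp_ms, key)` pairs; the function name is hypothetical. Real stream processors also handle late-arriving records and keep this state in fault-tolerant stores.

```python
from collections import Counter


def tumbling_window_counts(events, window_ms):
    """Count events per (key, window_start) using tumbling windows.

    `events` is an iterable of (timestamp_ms, key) pairs. Each event belongs to the
    window starting at the largest multiple of `window_ms` not after its timestamp.
    """
    counts = Counter()
    for timestamp_ms, key in events:
        window_start = timestamp_ms - (timestamp_ms % window_ms)
        counts[(key, window_start)] += 1
    return dict(counts)


clicks = [
    (1_000, "home"),
    (12_000, "home"),
    (59_999, "cart"),
    (60_000, "home"),
    (61_500, "cart"),
]
print(tumbling_window_counts(clicks, window_ms=60_000))
# {('home', 0): 2, ('cart', 0): 1, ('home', 60000): 1, ('cart', 60000): 1}
```

Tumbling windows are the simplest case; hopping and session windows follow the same idea with overlapping or activity-based boundaries.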

Benefits of Apache Kafka

1. Processing Speed

Kafka implements a data processing system with brokers, topics, and APIs that, for streaming workloads, can outperform writing every event to a SQL or NoSQL database. It scales horizontally by adding hardware to multi-node clusters, which can be deployed across multiple data center locations. In some published benchmarks, Kafka has delivered real-time data with lower latency than Apache Pulsar and RabbitMQ across a streaming data architecture.

2. Platform Scalability

Apache Kafka was built to overcome the high latency of batch-oriented queue processing with systems such as RabbitMQ at the scale of the world’s largest websites. For real-time workloads, Kafka is often preferred over batch frameworks such as Apache Hadoop because of its much lower latency. Modern enterprises running on cloud infrastructure need advanced fraud detection and instant inventory updates over secure (SSL/TLS) connections, and Kafka’s low-latency design makes these possible.

3. Pre-Built Integrators

The Kafka Connect ecosystem offers 120+ pre-built connectors from developers, partners, and ecosystem companies. Each connector integrates with a specific system; examples include Amazon S3, Google BigQuery, Elasticsearch, MongoDB, Redis, Azure Cosmos DB, AEP, SAP, Splunk, and Datadog. These connectors speed up application development while meeting organizational integration requirements.

4. Managed Cloud

Apache Kafka can be self-managed, and third-party providers also offer it as a managed cloud service, some with ksqlDB integration for SQL-based stream processing. Kafka also needs a place to keep all its data: tiered storage automatically moves older, less frequently accessed data to cheaper, slower storage while keeping new, frequently accessed data on fast storage. This lets the system run and be managed smoothly across different cloud providers, giving businesses more flexibility.
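The tiering rule can be sketched as a small planning function. This is a toy model under stated assumptions: a partition’s log is a list of segments with a known age, only closed segments are eligible to move, and anything older than a hypothetical `local_retention_hours` threshold is served from remote storage only. Real Kafka tiered storage is configured per topic and carried out by the brokers through a pluggable remote storage manager.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    base_offset: int  # offset of the first record in the segment
    age_hours: float
    closed: bool      # only closed (rolled) segments are eligible to move


def plan_tiering(segments, local_retention_hours):
    """Split segments into those kept on fast local disk and those served from remote storage."""
    local, remote = [], []
    for seg in segments:
        if seg.closed and seg.age_hours > local_retention_hours:
            remote.append(seg.base_offset)
        else:
            local.append(seg.base_offset)
    return local, remote


segments = [
    Segment(base_offset=0, age_hours=72, closed=True),
    Segment(base_offset=1000, age_hours=30, closed=True),
    Segment(base_offset=2000, age_hours=2, closed=True),
    Segment(base_offset=3000, age_hours=0.5, closed=False),  # active segment, still being written
]
local, remote = plan_tiering(segments, local_retention_hours=24)
print(local)   # [2000, 3000]
print(remote)  # [0, 1000]
```

Keeping the active and recent segments local means the common case (consumers reading near the end of the log) stays on fast disk, while replays of old data are served from the cheaper tier.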

5. Enterprise Security

Many Fortune 500 companies use Apache Kafka, which supports robust security measures. Apache Kafka itself provides TLS encryption, SASL authentication, and ACL-based authorization, while commercial distributions add features such as Role-Based Access Control (RBAC), Secret Protection for passwords, and structured audit logs. Audit logs let teams trace security-relevant events in cloud deployments and investigate threats such as scripted attacks, account compromise, and DDoS attempts.

Apache Kafka Ecosystem Components

- Apache Spark: A fast, unified analytics engine for batch and stream processing, often used to process and analyze Kafka’s real-time data streams.

- Apache NiFi: A user-friendly data routing and transformation application that makes it simple to move data into or out of Kafka using flow-based programming.

- Apache Flink: A stream-processing framework built for high-throughput, low-latency applications, often used for complex event processing on streams coming from Kafka.

- Apache Hadoop: The original distributed storage and processing framework, often used as a long-term data lake for historical data offloaded from Kafka topics.

- Apache Camel: An integration framework that connects Kafka with a wide range of systems and protocols through its large library of components.

- Apache Cassandra: A highly available distributed NoSQL database often used to store data for applications consuming topics from Kafka.

In essence, Apache Kafka is a distributed and powerful event streaming platform providing a highly scalable, fault-tolerant, and durable solution for handling massive volumes of real-time data. It acts as the central nervous system for modern data architectures, enabling systems to publish, store, and process streams of records as they occur.

FAQs

1. What problem does Kafka primarily solve for businesses?

Kafka is mainly a solution for reliably processing real-time data streams with high throughput, which enables organizations to link very different systems with a central and scalable data pipeline.

2. Is Kafka a message queue like other queues, or something different?

Kafka is something different: rather than a traditional message queue, it is a distributed streaming platform that retains messages in a persistent, ordered log. Traditional message queues typically delete messages once they are consumed, so the messages there are transient.

3. What are the main components of Kafka architecture?

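The main components of Kafka architecture are producers, brokers (the servers), consumers, and a metadata and consensus layer: ZooKeeper in older versions, or KRaft, Kafka’s built-in Raft-based consensus protocol, which replaces ZooKeeper in recent versions.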

4. What is the difference between a Kafka topic and a partition?

A topic is a named category of records. Each topic is split into ordered, replicated partitions, which enable parallel reads and writes and hence scalability.